AI: Triumph of hope over reality

Dr. David Millhouse

Thu 20 Feb 2025

Is AI Truly Disruptive?

Optimists argue that Artificial General Intelligence (AGI) will serve as a stepping stone toward Artificial Super Intelligence (ASI), with some claiming ASI could emerge within “a few thousand days.” However, this enthusiasm contrasts sharply with current technological constraints.

Recent benchmarks reveal that larger, costlier LLMs consistently outperform smaller models, with one achieving a 75.7% success rate on tasks that cost $20 each to process. That still leaves roughly one task in four unsolved. Industries like law and finance, where precision is paramount, cannot tolerate such error margins. For them, even minor mistakes can spell the difference between solvency and collapse.

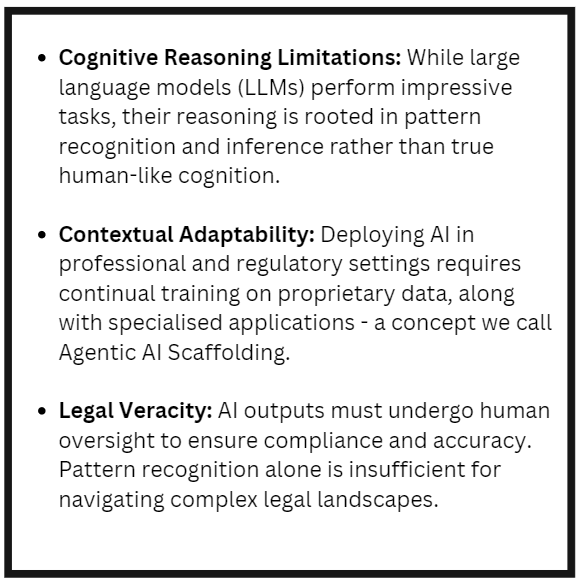
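To make the benchmark figures concrete, the short sketch below works through the arithmetic behind them. It uses only the two numbers quoted above (a 75.7% success rate and $20 per task). The function names are illustrative, and the cost-per-correct-result figure rests on an assumption not made in the benchmark itself: that a failed task is simply retried and that attempts are independent.

```python
def error_rate(success_rate: float) -> float:
    """Share of tasks the model gets wrong."""
    return 1.0 - success_rate


def expected_cost_per_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend per correct result.

    ASSUMPTION (not from the benchmark): failed tasks are retried and
    attempts are independent, so expected attempts = 1 / success_rate.
    """
    if not 0.0 < success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


if __name__ == "__main__":
    success_rate = 0.757  # success rate quoted in the article
    cost_per_task = 20.0  # dollars per task quoted in the article

    print(f"Error rate: {error_rate(success_rate):.1%}")
    cost = expected_cost_per_success(cost_per_task, success_rate)
    print(f"Expected cost per correct result: ${cost:.2f}")
```

Under these assumptions the script reports an error rate of 24.3% and an expected cost of about $26.42 per correct result. The calculation also presumes someone can tell which outputs are wrong, which is where the cost of skilled oversight enters.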

The adoption of AGI in professional environments remains constrained by:

High operational costs,

Uncertain performance guarantees, and

The need for skilled oversight.

Regulation and Human Oversight: Non-Negotiable Necessities

As AI technologies evolve, regulatory scrutiny is becoming increasingly stringent. Governments, statutory bodies, and courts are actively crafting rules to mitigate risks. Unchecked AI deployments can result in data breaches, ethical violations, and regulatory infractions.

For organizations, robust internal protocols and staff training have become critical for:

Ensuring compliance,

Attracting and retaining talent, and

Maintaining legal defences.

Case studies from ActiumAI illustrate the stark difference between effective and ineffective AI integration:

Success: Properly implemented Agentic AI Scaffolding enabled productivity gains of 4 to 545 times.

Failure: Deploying GPT models without skilled oversight led to inefficiencies, added costs, and legal risks, as irrelevant outputs required extensive human correction.

Key Areas Requiring Attention:

Data Security and Privacy: Concerns about sensitive data being uploaded to external servers highlight vulnerabilities akin to “Swiss cheese.” Cybersecurity and data protection are paramount, with significant liabilities for breaches.

AI Hallucinations: LLMs often fabricate details (“hallucinate”), presenting them as facts. This is particularly risky in legal and regulatory contexts.

Professional Rejection of AI: Many organizations, wary of such pitfalls, have banned GPT-based tools from their workplaces, perceiving them as uncontrolled “chatrooms” with the potential for misinformation and compliance breaches.

Monetizing AI Technologies: Beyond the Hype

The hype surrounding AI often obscures its real value. While some organizations tout success, surveys reveal mixed outcomes:

58% of Australian companies investing in AI reported unmet expectations.

70% of investors exited AI-focused deals after early disappointments.

The lesson? Success hinges on leadership and execution. While developers create ground-breaking technologies, it takes visionary leaders to turn innovations into sustainable businesses.

“Good ideas and good products are a dime a dozen. Good execution and good management – in a word, good people – are rare.”

Australia, in particular, faces unique challenges. A history of unprofitable ventures in the tech sector has made it difficult for the country to replicate Silicon Valley’s success. However, there’s reason for optimism. Non-zombie AI companies in Australia are experiencing exponential growth, repeatedly doubling their valuations. These firms, capable of addressing real-world problems and generating sustainable revenues, are expected to lead the next wave of investment.

Disclaimer: This article is prepared by Dr. David Millhouse for educational purposes only. While all reasonable care has been taken by the author in the preparation of this information, the author and InvestmentMarkets (Aust) Pty. Ltd. as publisher take no responsibility for any actions taken based on information contained herein or for any errors or omissions within it. Interested parties should seek independent professional advice prior to acting on any information presented. Please note past performance is not a reliable indicator of future performance.